2025: An Agentic Year?

2024 marked the first fiscal year where organizations could allocate budgets specifically for GenAI investments. Unsurprisingly, interest in exploring GenAI continued to surge.

Yes: exploring. Despite many vendor-driven predictions that “exploration would move to production,” only a few organizations managed to cross that line. That said, I’ve been impressed by how quickly some organizations have come to understand this new technology and started building with it.

The market isn’t waiting around for people to catch their breath. The buzz has already moved on to the next big wave: AI agents.

What is an AI Agent?

The concept of an AI agent is relatively straightforward: these are autonomous systems capable of performing specific tasks without human intervention.

The possibilities are virtually endless. Imagine booking your next trip simply by saying, “Find me a warm destination for one week in February, with direct flights from New York City. Budget for two people including flights and hotel: $2,000.” Minutes later, an AI agent could deliver your flight and hotel confirmations straight to your inbox.

From Zero-Shot to Autonomous Agents: Humans Quickly Kicked Out of the Loop

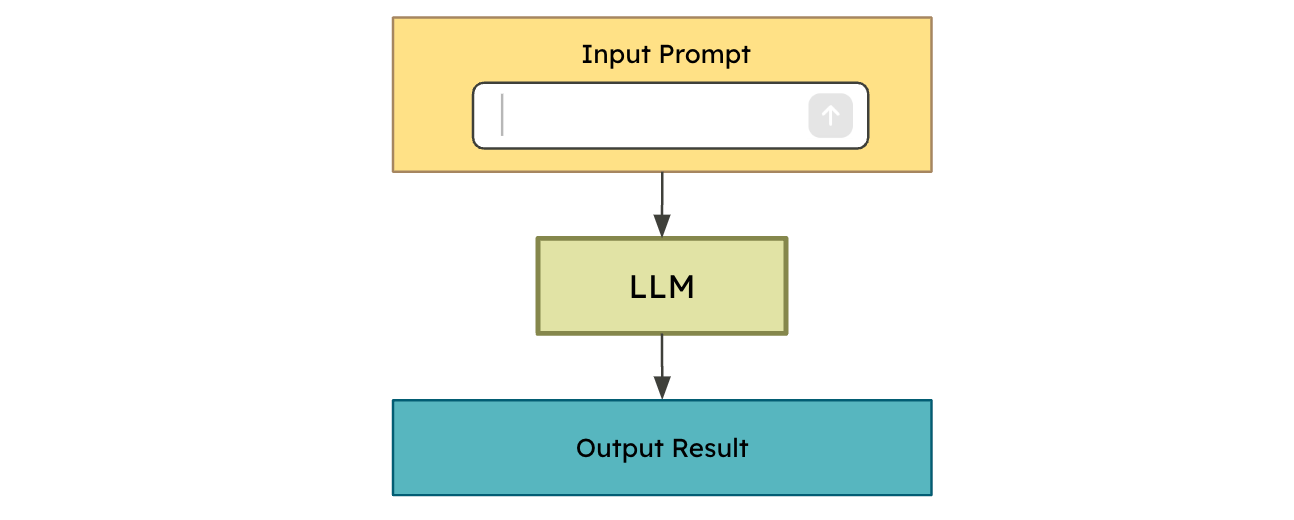

When large language models (LLMs) were initially rolled out to the public, they were (and largely still are) used in a straightforward manner:

Provide a prompt, i.e., a request written in natural language.

The LLM processes the prompt.

The result is returned.

Initially, these requests centered on generating "something": a text-to-something process based solely on the model’s internal capabilities. That "something" could be text, an image, a video, or another kind of output.

While the results were often impressive, they were also imperfect. Challenges like hallucinations (plausible-sounding answers that are incorrect or fabricated) became a key concern. Relying solely on the LLM’s “internal knowledge,” constructed during its training, has inherent limitations: that knowledge is fixed when training ends and can be incomplete or out of date.

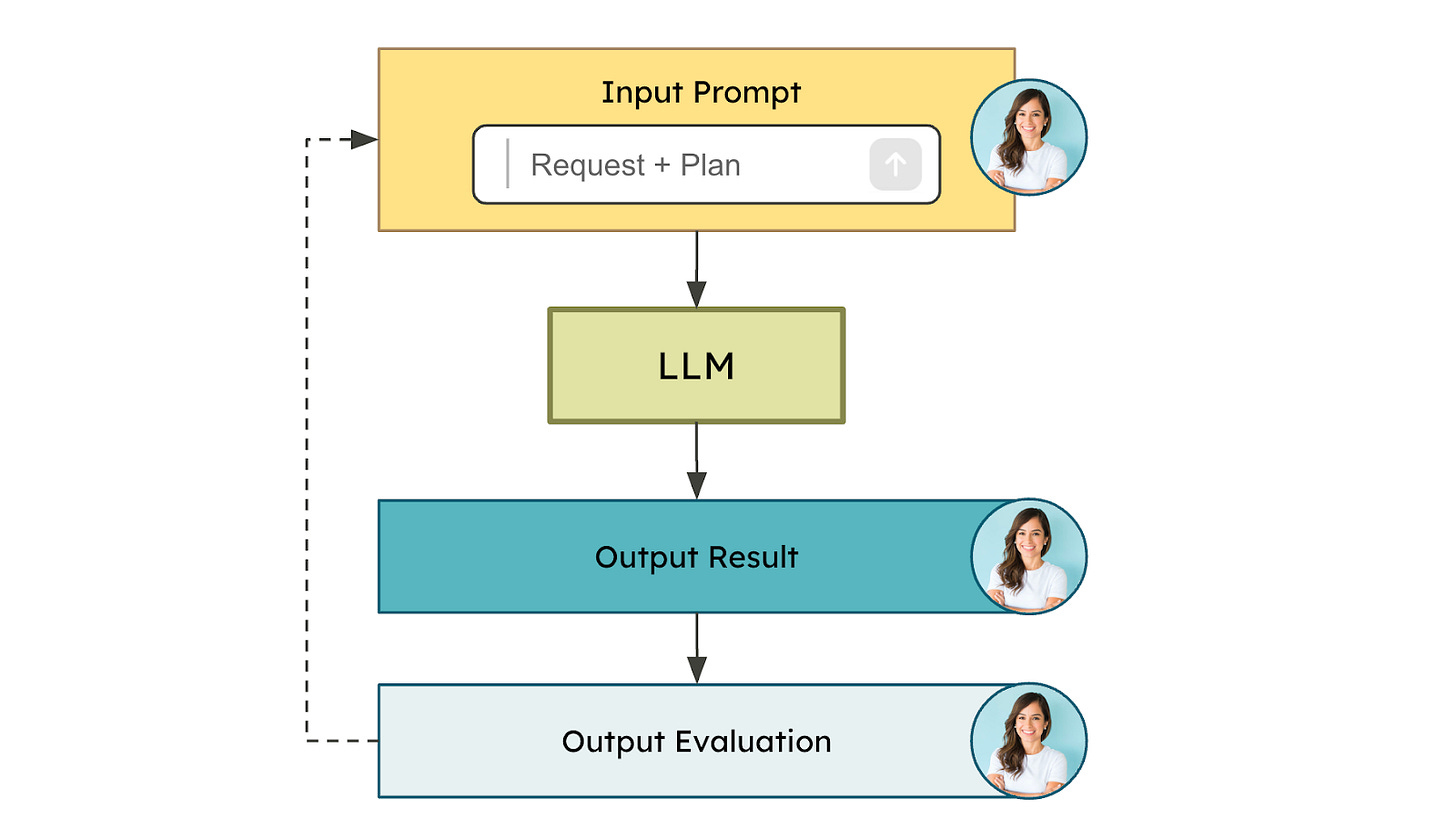

The most common technique, sending a single prompt with no worked examples and receiving a single response, is known as zero-shot prompting. Producing consistently high-quality results in a single pass is notoriously difficult, even for humans. Let’s be honest: I didn’t write this post in one go. I’ve paused, re-read, scrapped sections, and rewritten others. I wish I could say otherwise.

Over the past few months, new ideas, research, and solutions have emerged to improve the performance of LLMs when handling requests in natural language:

Advanced prompting techniques have matured, such as chain-of-thought prompting, which has the model work through intermediate reasoning steps before giving its final answer.

Retrieval-Augmented Generation (RAG) has become widely adopted: relevant, up-to-date information is retrieved from external sources and supplied to the model as context for each request.

LLMs have been enhanced with tool use: the ability to call external functions or APIs to gather information, perform actions, or manipulate data.
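To make tool use concrete, here is a minimal Python sketch of the application side of the exchange: the model emits a structured request naming a tool, and ordinary code runs that tool and hands the result back. The JSON shape `{"tool": ..., "arguments": ...}` and the `get_forecast` and `search_flights` functions are hypothetical; each LLM provider defines its own tool-calling format, and real tools would call external APIs instead of returning canned data.

```python
import json
from typing import Any, Callable

# Hypothetical tools exposed to the model. Real tools would call external
# APIs; these return canned data so the example runs offline.
def get_forecast(city: str, month: str) -> dict[str, Any]:
    canned_highs_c = {("Miami", "February"): 24, ("Cancun", "February"): 28}
    return {"city": city, "month": month, "avg_high_c": canned_highs_c.get((city, month))}


def search_flights(origin: str, destination: str, direct_only: bool) -> list[dict[str, Any]]:
    flights = [
        {"from": "NYC", "to": "Miami", "direct": True, "price_per_person": 250},
        {"from": "NYC", "to": "Cancun", "direct": False, "price_per_person": 310},
    ]
    return [
        f for f in flights
        if f["from"] == origin and f["to"] == destination and (f["direct"] or not direct_only)
    ]


# Registry mapping the tool names the model may use to Python functions.
TOOLS: dict[str, Callable[..., Any]] = {
    "get_forecast": get_forecast,
    "search_flights": search_flights,
}


def run_tool_call(model_output: str) -> str:
    """Execute a tool call the model emitted and return the result as JSON.

    Assumes the model replies with {"tool": <name>, "arguments": {...}};
    real provider APIs each define their own tool-calling format.
    """
    call = json.loads(model_output)
    tool = TOOLS.get(call["tool"])
    if tool is None:
        return json.dumps({"error": f"unknown tool {call['tool']!r}"})
    result = tool(**call["arguments"])
    # In an agent, this string would be appended to the conversation so the
    # model can use the result in its next turn.
    return json.dumps(result)


print(run_tool_call('{"tool": "get_forecast", "arguments": {"city": "Miami", "month": "February"}}'))
```

The model never executes anything itself; it only proposes calls, which keeps the application in charge of what actually runs.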

Take my travel request as an example, and the benefits of these elements become clear. On top of searching the web and making reservations, the solution also needs to navigate a complex combination of constraints: weather, flight availability, hotels, travel dates, budget, and the number of travelers.
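Juggling constraints like these is a place where plain, deterministic code can complement an LLM. Below is a minimal Python sketch, assuming the request has already been turned into structured fields: it checks made-up candidate packages against the example's constraints (warm, direct flights, one week, two travelers, $2,000 total). The `Package` fields, all prices, and the 24 °C threshold for "warm" are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Package:
    destination: str
    avg_high_c: float        # expected February daytime high
    direct_flight: bool
    flight_per_person: int   # round-trip fare, USD
    hotel_per_night: int     # one room for both travelers, USD


# Constraints from the example request; the "warm" threshold is an assumption.
TRAVELERS = 2
NIGHTS = 7
BUDGET = 2_000
MIN_WARM_C = 24


def total_cost(p: Package) -> int:
    return p.flight_per_person * TRAVELERS + p.hotel_per_night * NIGHTS


def violations(p: Package) -> list[str]:
    """Return the constraints a candidate breaks (an empty list means acceptable)."""
    problems = []
    if p.avg_high_c < MIN_WARM_C:
        problems.append("not warm enough")
    if not p.direct_flight:
        problems.append("no direct flight")
    if total_cost(p) > BUDGET:
        problems.append(f"over budget by ${total_cost(p) - BUDGET}")
    return problems


# Made-up candidates for illustration only.
candidates = [
    Package("Miami", 24, True, 250, 180),
    Package("San Juan", 28, True, 380, 190),
    Package("Phoenix", 21, True, 300, 120),
]
for p in candidates:
    print(p.destination, total_cost(p), violations(p) or "OK")
```

With these numbers, only Miami ($1,760) passes; San Juan is $90 over budget and Phoenix is not warm enough. An agent could call a checker like this as a tool instead of relying on the model to do the arithmetic.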

In a classic non-agentic workflow, a human remains in control: planning, providing feedback, and iterating manually.

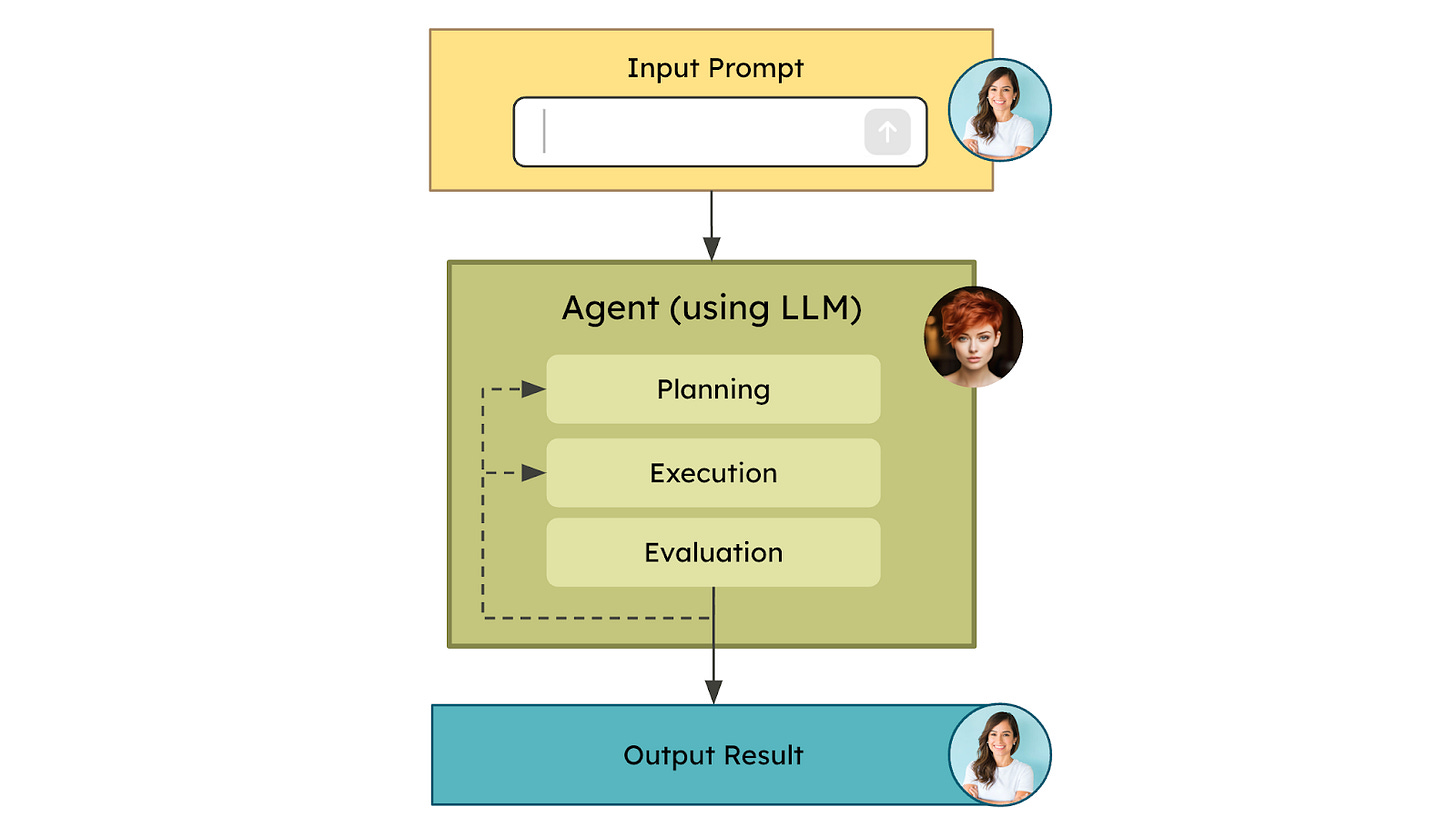

By contrast, the ultimate agentic workflow is a fully autonomous system that can independently create a plan, execute it, evaluate responses, and iterate as needed—the human is kept out of the loop.

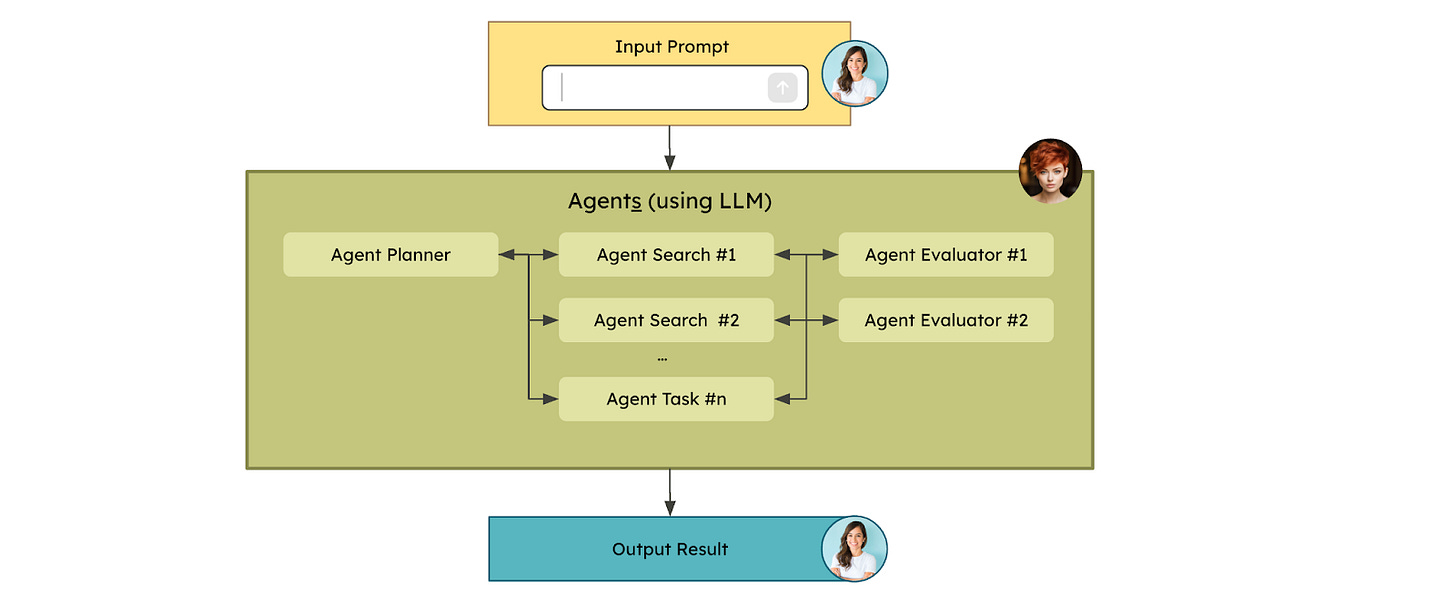
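The difference between the two workflows comes down to who closes the loop. Here is a minimal Python sketch of the autonomous version, in which plan, execute, and evaluate are passed in as functions. In a real agent, each would typically be an LLM call, possibly with tool use. The function names, the convention that `None` feedback means "done," and the `max_iterations` cap are assumptions of this sketch, not any particular framework's API.

```python
from typing import Callable, List, Optional, Tuple

# The three roles. In a real agent each would typically be an LLM call.
Planner = Callable[[str, Optional[str]], List[str]]    # (goal, feedback) -> steps
Executor = Callable[[str], str]                        # step -> result
Evaluator = Callable[[str, List[str]], Optional[str]]  # (goal, results) -> feedback or None


def run_agent(goal: str, plan: Planner, execute: Executor, evaluate: Evaluator,
              max_iterations: int = 5) -> Tuple[List[str], int]:
    """Plan, execute, evaluate, and iterate until the evaluator is satisfied."""
    feedback: Optional[str] = None
    for iteration in range(1, max_iterations + 1):
        steps = plan(goal, feedback)                 # create or revise the plan
        results = [execute(step) for step in steps]  # carry out each step
        feedback = evaluate(goal, results)           # None means the goal is met
        if feedback is None:
            return results, iteration
    # Without a stopping rule, an autonomous loop could keep iterating
    # (and spending tokens) indefinitely.
    raise RuntimeError(f"goal not met after {max_iterations} iterations: {feedback}")


# Toy stand-ins for the LLM calls, using made-up trip totals.
PRICES = {"Aruba": 2_400, "San Juan": 2_090, "Miami": 1_760}


def toy_plan(goal: str, feedback: Optional[str]) -> List[str]:
    # First attempt checks two destinations; after negative feedback, try another.
    return ["Aruba", "San Juan"] if feedback is None else ["Miami"]


def toy_execute(step: str) -> str:
    return f"{step}: ${PRICES[step]}"


def toy_evaluate(goal: str, results: List[str]) -> Optional[str]:
    within_budget = [r for r in results if int(r.split("$")[1]) <= 2_000]
    return None if within_budget else "all options over budget; try other destinations"


results, iterations = run_agent("a warm week for two under $2,000",
                                toy_plan, toy_execute, toy_evaluate)
print(iterations, results)  # 2 ['Miami: $1760']
```

Removing the human from this loop is exactly what makes it powerful and hard to trust: nothing but `evaluate` decides whether the output is good enough.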

The potential here is vast, and so is the potential complexity. Imagine deploying multiple agents, each with specialized skills: a “master agent” that excels at creating and coordinating the plan, another that finds travel options, a third that evaluates whether the criteria are met, and so on.

Just as marketing evolved from single-channel to multi-channel to omni-channel, the future of AI points toward omni-agents!
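As a rough illustration of the multi-agent idea, here is a minimal Python sketch of a coordinator handing work to two specialists. Every class here is hypothetical and the specialists return canned results. Also, the coordinator's plan is hard-coded. In the scenario described above, the "master agent" would generate that plan itself, most likely with an LLM.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Protocol


class Agent(Protocol):
    def handle(self, task: Dict[str, Any]) -> Dict[str, Any]: ...


class FinderAgent:
    """Hypothetical specialist that finds travel options (canned data here)."""
    def handle(self, task: Dict[str, Any]) -> Dict[str, Any]:
        return {"options": [{"destination": "Miami", "total": 1_760},
                            {"destination": "San Juan", "total": 2_090}]}


class EvaluatorAgent:
    """Hypothetical specialist that keeps only the options within budget."""
    def handle(self, task: Dict[str, Any]) -> Dict[str, Any]:
        return {"accepted": [o for o in task["options"] if o["total"] <= task["budget"]]}


@dataclass
class Coordinator:
    """The "master agent": routes work between specialists and logs each hand-off."""
    agents: Dict[str, Agent]
    log: List[str] = field(default_factory=list)

    def run(self, request: Dict[str, Any]) -> Dict[str, Any]:
        # Hard-coded plan; an LLM-driven coordinator would decide these steps.
        found = self.agents["finder"].handle(request)
        self.log.append(f"finder returned {len(found['options'])} options")
        checked = self.agents["evaluator"].handle({**request, **found})
        self.log.append(f"evaluator accepted {len(checked['accepted'])}")
        return checked


coordinator = Coordinator({"finder": FinderAgent(), "evaluator": EvaluatorAgent()})
print(coordinator.run({"budget": 2_000}))
print(coordinator.log)
```

Each hand-off is another place where errors can compound, which is part of why the complexity grows with the number of agents.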

Back in today’s reality, one of the most talked-about agentic workflows in the enterprise world is the concept of a customer service agent. These systems are envisioned to hold conversations and resolve customer issues autonomously, i.e., without human intervention. Unlike today’s fixed-option, button-driven systems (we’ve all been frustrated clicking through dead-end menus), these probabilistic agentic systems promise unparalleled flexibility and power.

Use cases go far beyond customer service. Take the demo of Devin, Cognition’s AI software engineer, from a few months ago. It gained significant attention for showcasing an agent capable of autonomously developing software, identifying coding issues, and fixing them, all without human intervention.

The Reality Check: Challenges in Building Trustworthy Agents

So, agents sound amazing, right? Every customer service and engineering job is now at risk? Not so fast. Despite their promise, these systems remain extremely complex to develop, largely because they are unpredictable and difficult to control. Consider the headlines: Air Canada’s chatbot giving a customer incorrect information about its bereavement fare policy, or a Chevrolet dealership’s chatbot being talked into agreeing to sell a Chevy for $1.

I respect these companies for being early adopters. But let’s face it—they likely underestimated the risks and challenges that come with embracing such cutting-edge technology.
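One common way to regain some control is to keep hard business rules outside the model. Ordinary code validates whatever the agent proposes before it reaches a customer, so that no conversation can talk the system into a $1 car. Here is a minimal Python sketch of such a guardrail. The `Offer` type, the list price, and the 10% discount cap are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Offer:
    item: str
    price: int  # whole US dollars


# Hypothetical business rules that live outside the model, so no prompt can
# change them. The list price and discount cap are made up.
LIST_PRICES = {"SUV": 58_000}
MAX_DISCOUNT_PERCENT = 10


def check_offer(offer: Offer) -> Tuple[bool, str]:
    """Validate an offer the agent drafted before it is shown to a customer."""
    list_price = LIST_PRICES.get(offer.item)
    if list_price is None:
        return False, f"unknown item {offer.item!r}: escalate to a human"
    floor = list_price * (100 - MAX_DISCOUNT_PERCENT) // 100
    if offer.price < floor:
        return False, f"${offer.price:,} is below the ${floor:,} floor: escalate to a human"
    return True, "ok"


print(check_offer(Offer("SUV", 1)))       # rejected
print(check_offer(Offer("SUV", 55_000)))  # (True, 'ok')
```

Checks like this do not make the model itself more reliable. However, they limit how much damage a bad response can do.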

Beyond performance, cost is another tricky aspect to manage. Every self-iteration an AI agent performs uses more tokens—a fundamental unit of cost in LLM usage. And the more tokens you consume, the higher the bill climbs.
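A back-of-the-envelope model shows why the bill climbs faster than the number of steps. In a typical agent loop, each iteration sends the accumulated history back to the model as input: instructions, earlier replies, and tool results. As a result, input tokens grow with every step. The Python sketch below assumes that pattern. The per-token prices and token counts are placeholders, not any provider's actual rates.

```python
from typing import Tuple

# Hypothetical prices in USD per 1,000 tokens; real rates vary by provider and
# model, and output tokens usually cost more than input tokens.
PRICE_PER_1K_INPUT = 0.003
PRICE_PER_1K_OUTPUT = 0.015


def loop_cost(iterations: int, base_prompt: int, output_per_step: int,
              tool_result_per_step: int) -> Tuple[int, int, float]:
    """Estimate tokens and cost for an agent loop that re-sends its whole history.

    Returns (total input tokens, total output tokens, estimated cost in USD).
    """
    history = base_prompt  # instructions + user request
    total_in = total_out = 0
    for _ in range(iterations):
        total_in += history           # the full history is sent as input
        total_out += output_per_step  # the model's reply for this step
        # The reply and the tool's result both join the history for next time.
        history += output_per_step + tool_result_per_step
    cost = total_in / 1000 * PRICE_PER_1K_INPUT + total_out / 1000 * PRICE_PER_1K_OUTPUT
    return total_in, total_out, cost


for n in (1, 10):
    tokens_in, tokens_out, usd = loop_cost(n, base_prompt=2_000, output_per_step=500,
                                           tool_result_per_step=1_500)
    print(f"{n:>2} iterations: {tokens_in:,} input + {tokens_out:,} output tokens, ~${usd:.4f}")
```

With these assumed inputs, ten iterations send 110,000 input tokens versus 2,000 for a single pass. As a result, ten times the steps cost about thirty times as much.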

2025: The Year of Talking and Developing AI Agents, But Not Trusting Them Yet

While the concept of an agent is easy to understand, the non-deterministic nature of agentic solutions—meaning their inherent unpredictability—makes them incredibly complex to build, evaluate, and, most importantly, trust.

There’s no denying the excitement surrounding the potential of AI agents. But just as I wasn’t confident that most organizations were ready to move their first GenAI use cases into production in 2024, I wouldn’t bet on agents being fully deployed across industries within the next 12 months. That said, I firmly believe agents will dominate the conversation this year, providing plenty of opportunities for experimentation and learning.

🔑 As we navigate this learning curve, semi-agentic workflows—those that keep humans in the loop—will already deliver significant efficiency gains. It’s a practical and powerful step forward while we continue to figure out how to make fully autonomous agents reliable and trustworthy.
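A semi-agentic workflow can be as simple as letting the agent do the legwork while a person signs off on anything irreversible. Here is a minimal Python sketch of that checkpoint. The `Action` type, the reversible/irreversible split, and the `approve` hook are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    description: str
    reversible: bool  # searching is reversible; paying for a booking is not


def run_with_approval(actions: List[Action], approve: Callable[[Action], bool]) -> List[str]:
    """Run agent-proposed actions, pausing for human sign-off on irreversible ones.

    `approve` is a hypothetical hook standing in for whatever review step an
    organization uses (a console prompt, a review UI, a ticket queue).
    """
    log = []
    for action in actions:
        if not action.reversible and not approve(action):
            log.append(f"skipped (not approved): {action.description}")
            continue
        # A real system would perform the action here.
        log.append(f"done: {action.description}")
    return log


plan = [
    Action("search direct flights NYC -> Miami in February", reversible=True),
    Action("shortlist hotels under $180/night", reversible=True),
    Action("book flights and hotel for $1,760", reversible=False),
]

# A reviewer who declines: the agent still does the research, but nothing is booked.
for line in run_with_approval(plan, approve=lambda action: False):
    print(line)
```

Passing something like `lambda a: input(f"Approve {a.description}? [y/N] ") == "y"` as `approve` turns the checkpoint into an interactive prompt. The human stays in the loop only where mistakes are costly.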