Small, Large, Specialized: The Evolution of GenAI Models

Would you use the same book to find tonight’s dinner recipe and to learn how to code in Python?

Yet, consumers—and developers!—around the world have been using the same models for widely different needs.

How did we get here, and where is all this going?

Today: Size Matters

In a recent interview, Aidan Gomez, CEO and co-founder of Cohere, described how big a bet it was to test one theory: models will get better as they get larger.

“I think what it required to see the future was perhaps an irrational conviction in this idea of scale. If we make these models larger, if we train them on more data, if we give them more capacity, more computing power, they are going to continue to get smarter and it’s not going to slow down. (...) It was an extremely non-obvious bet to pour capital into scaling.”

Aidan Gomez, CEO and co-founder of Cohere

At the time, the cost of testing this theory prevented even the largest private organizations, such as Google, from confirming this hypothesis.

Researchers were eventually able to make the bet, and the results suggested that the hypothesis held. The general public discovered it with OpenAI's release of ChatGPT in November 2022.

For the past few years, the strategy has mostly been to improve models by throwing more data, parameters, and compute at the problem. The parallel with Moore's Law is easy to draw.

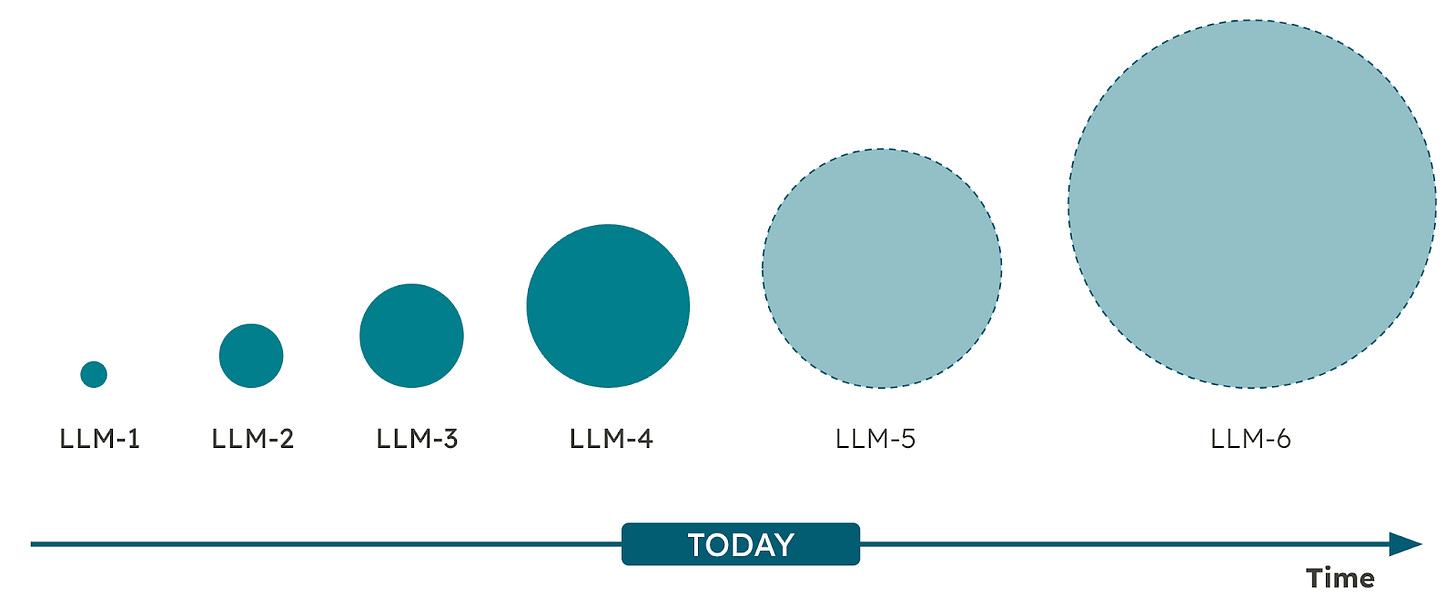

New model releases growing over time

Taking OpenAI as an example, we can see how quickly the size of its GPT models has grown:

GPT-1: 0.117B Parameters (June 2018)

GPT-2: 1.5B Parameters (February 2019)

GPT-3: 175B Parameters (May 2020)

GPT-4: 1.76T Parameters (Estimate, March 2023)
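To put this growth in numbers, here is a minimal Python sketch that computes the multiplier between consecutive GPT generations using the parameter counts listed above. The GPT-4 figure is an unofficial estimate, so the last ratio inherits that uncertainty.

```python
# Parameter counts in billions, as listed above.
# The GPT-4 value is an unofficial estimate, not a disclosed figure.
gpt_sizes = [
    ("GPT-1", 0.117),
    ("GPT-2", 1.5),
    ("GPT-3", 175.0),
    ("GPT-4", 1760.0),
]

def growth_factors(sizes):
    """Return (previous model, next model, size ratio) for each consecutive pair."""
    return [
        (prev_name, name, size / prev_size)
        for (prev_name, prev_size), (name, size) in zip(sizes, sizes[1:])
    ]

for prev_name, name, ratio in growth_factors(gpt_sizes):
    print(f"{prev_name} -> {name}: ~{ratio:.0f}x more parameters")
```

With these figures, each generation is roughly 13x, 117x, and 10x larger than the previous one.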

Future: Specialization

Over the next few years, we could very well see more of the same strategy, with vendors continuing to grow their models:

With its latest model, GPT-4o, OpenAI keeps pushing the limits of what a single model can do. Some suggest that GPT-5 could have 52 trillion parameters! (Source: Life Architect). This prediction makes sense if we expect OpenAI to keep growing its models exponentially, which is what it has achieved so far.

As model size goes up, so does performance. Awesome, right?! However, as model size increases, so do:

The cost to train these models

The cost to use these models

The model latency
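Why do all three grow together? A common rule of thumb for dense transformer models is that training takes roughly 6 × N × D floating-point operations (N parameters, D training tokens) and generating one token takes roughly 2 × N. The Python sketch below applies these approximations; it ignores attention overhead and does not apply directly to mixture-of-experts models, and the 20B and 2T sizes in the comparison are illustrative assumptions.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute for a dense transformer: ~6 * N * D FLOPs."""
    return 6 * params * tokens

def inference_flops_per_token(params: float) -> float:
    """Approximate forward-pass compute per generated token: ~2 * N FLOPs."""
    return 2 * params

# GPT-3: 175B parameters trained on about 300B tokens.
print(f"GPT-3 training: ~{training_flops(175e9, 300e9):.2e} FLOPs")

# Illustrative sizes: per-token compute scales linearly with parameter count.
small, large = 20e9, 2e12
ratio = inference_flops_per_token(large) / inference_flops_per_token(small)
print(f"A {large / 1e9:.0f}B model needs ~{ratio:.0f}x the compute per token "
      f"of a {small / 1e9:.0f}B model")
```

Because per-token compute grows linearly with size, a model 100 times larger costs roughly 100 times more to run for every token it generates, before accounting for the extra hardware needed to keep latency down.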

Model costs are increasing at an unsustainable pace. For example, Databricks shared that its DBRX model, with 132B parameters, cost $10 million to build and train.

So, what is the alternative? Just as we don’t have a single book answering every question—covering both food recipes and Python development tips—we shouldn’t expect to keep using one LLM for everything.

Instead, we will start seeing an explosion of models of different sizes and specializations.

If we take a look at the market, this has already started to happen.

Anthropic released not one but three models with Claude 3 in March 2024 (exact parameter counts were not disclosed, so the figures below are estimates):

Haiku: ~20B parameters

Sonnet: ~70B parameters

Opus: ~2T parameters

Beyond offering models of different sizes with Command and Command R, Cohere also provides specialized models for building a retrieval-augmented generation (RAG) architecture:

Embed models, available in English and multilingual versions

Rerank models, available in English and multilingual versions

Finally, Mistral quickly gained fame with its smaller models and recently released a code generation model called Codestral.

GenAI Models Will Grow Smaller and More Specialized

(Very) large models will continue to be built and serve as a base to create smaller, more specialized models.

🔑 No organization will deploy a single LLM across every business unit and use case. Instead, companies will pick and choose smaller, more specialized models for each specific need.
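In practice, "picking and choosing" often means a routing layer that maps each use case to a model. The Python sketch below shows the idea under loud assumptions: the catalog, model names, sizes, and use-case labels are all hypothetical, and a real router would also weigh cost, latency, and evaluation results.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelChoice:
    name: str        # hypothetical model identifier
    params_b: float  # illustrative size in billions of parameters
    specialty: str

# Hypothetical catalog: names and sizes are placeholders, not real products.
CATALOG = {
    "code": ModelChoice("code-small", 20.0, "code generation"),
    "search": ModelChoice("embed-small", 0.5, "embeddings for retrieval"),
    "chat": ModelChoice("chat-medium", 70.0, "general conversation"),
}

# Large general-purpose model used when no specialized model fits.
FALLBACK = ModelChoice("general-large", 1000.0, "general purpose")

def pick_model(use_case: str) -> ModelChoice:
    """Return the specialized model for a use case, or the large fallback."""
    return CATALOG.get(use_case, FALLBACK)

for use_case in ["code", "chat", "legal-review"]:
    model = pick_model(use_case)
    print(f"{use_case}: {model.name} ({model.specialty})")
```

Keeping a large general model as the fallback mirrors the idea that very large models remain useful while smaller, specialized ones handle the common, well-defined cases.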

This shift will create new challenges in terms of evaluating and operationalizing these models. We are just at the beginning of this AI revolution within enterprises!